Collecting nginx logs with LogStash

Reference: https://medium.com/devops-programming/b01bd0876e82


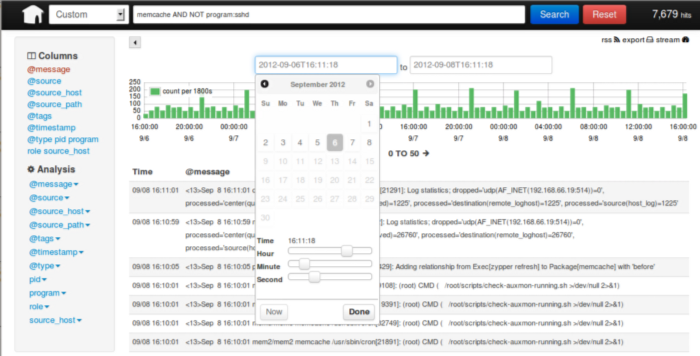

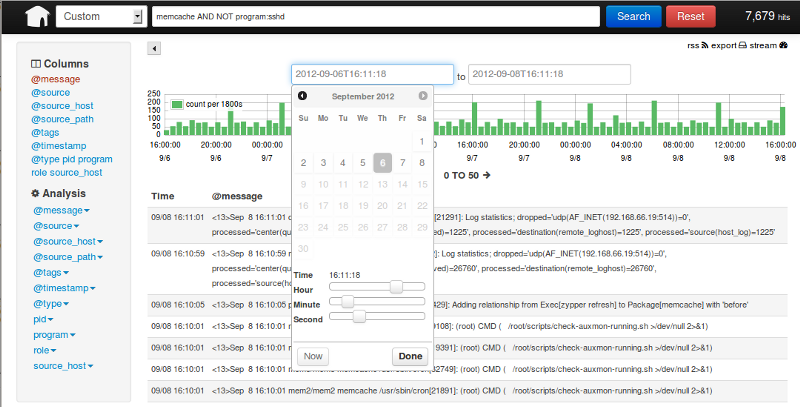

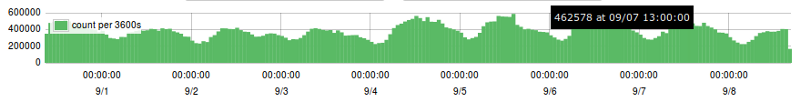

KIBANA WEB INTERFACE

Commando.io in DevOps & Programming

At Commando.io, we’ve always wanted a web interface to allow us to grep and filter through our nginx access logs in a friendly manner. After researching a bit, we decided to go with LogStash and use Kibana as the web front-end for ElasticSearch.

LogStash is a free and open source tool for managing events and logs. You can use it to collect logs, parse them, and store them for later.

First, let’s set up our centralized log server. This server will listen for events using Redis as a broker and send the events to ElasticSearch.

The following guide assumes that you are running CentOS 6.4 x64.

```shell
cd $HOME

# Get ElasticSearch 0.90.1, add it as a service, and autostart it
sudo yum -y install java-1.7.0-openjdk
wget https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-0.90.1.zip
unzip elasticsearch-0.90.1.zip
rm -rf elasticsearch-0.90.1.zip
mv elasticsearch-0.90.1 elasticsearch
sudo mv elasticsearch /usr/local/share
cd /usr/local/share
sudo chmod 755 elasticsearch
cd $HOME
curl -L http://github.com/elasticsearch/elasticsearch-servicewrapper/tarball/master | tar -xz
sudo mv *servicewrapper*/service /usr/local/share/elasticsearch/bin/
rm -Rf *servicewrapper*
sudo /usr/local/share/elasticsearch/bin/service/elasticsearch install
sudo service elasticsearch start
sudo chkconfig elasticsearch on
```
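`service elasticsearch start` may return before ElasticSearch is actually ready to answer HTTP requests. A small polling loop like the one below waits until the node responds before you move on. It is a sketch that assumes curl is installed and that ElasticSearch listens on its default HTTP port, 9200; `wait_for_es` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: wait until ElasticSearch answers HTTP requests.
# Assumes curl is installed and ElasticSearch uses its default port 9200.
wait_for_es() {
  url=${1:-http://localhost:9200/}
  tries=${2:-30}
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -s hides progress output; -f makes curl exit non-zero on HTTP errors
    if curl -sf "$url" > /dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

For example, `wait_for_es && curl 'http://localhost:9200/_cluster/health?pretty'` prints the cluster health once the node is up, and returns non-zero if it never answers within about 30 seconds.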

```shell
# Add the required prerequisite remi yum repository
sudo rpm --import http://rpms.famillecollet.com/RPM-GPG-KEY-remi
sudo rpm -Uvh http://rpms.famillecollet.com/enterprise/remi-release-6.rpm
sudo sed -i '0,/enabled=0/s//enabled=1/' /etc/yum.repos.d/remi.repo
```
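The `sed` expression on the last line is terse. `0,/enabled=0/` is a GNU sed address range that ends at the first line matching `enabled=0`, and the empty regex in `s//enabled=1/` reuses that same pattern, so only the first `enabled=0` in the file is flipped and later sections stay disabled. The sketch below runs the same command against a simplified, made-up stand-in for remi.repo; it assumes GNU sed and touches only a temporary file.

```shell
#!/bin/sh
# Demonstrates the remi.repo edit on a throwaway file (GNU sed required:
# the "0,/regex/" address form is a GNU extension).
demo_repo=$(mktemp)

# Simplified, hypothetical stand-in for /etc/yum.repos.d/remi.repo
cat > "$demo_repo" <<'EOF'
[remi]
enabled=0
[remi-test]
enabled=0
EOF

# Range 0,/enabled=0/ ends at the first match; s//.../ reuses that regex.
sed -i '0,/enabled=0/s//enabled=1/' "$demo_repo"

result=$(cat "$demo_repo")
rm -f "$demo_repo"
printf '%s\n' "$result"
```

Only the first section ends up enabled. Starting the range at 0 rather than 1 matters when the very first line already matches: with `1,/re/`, GNU sed would keep the range open until the next match.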

```shell
# Install Redis and autostart it
sudo yum -y install redis
sudo service redis start
sudo chkconfig redis on
```

```shell
# Install LogStash
wget http://logstash.objects.dreamhost.com/release/logstash-1.1.13-flatjar.jar
sudo mkdir --parents /usr/local/bin/logstash
sudo mv logstash-1.1.13-flatjar.jar /usr/local/bin/logstash/logstash.jar

# Create LogStash configuration file
cd /etc
sudo touch logstash.conf
```

Use the following LogStash configuration for the centralized server:

```
# Contents of /etc/logstash.conf
input {
  redis {
    host => "127.0.0.1"
    port => 6379
    type => "redis-input"
    data_type => "list"
    key => "logstash"
    format => "json_event"
  }
}
output {
  elasticsearch {
    host => "127.0.0.1"
  }
}
```

Finally, let’s start LogStash on the centralized server:

```shell
/usr/bin/java -jar /usr/local/bin/logstash/logstash.jar agent --config /etc/logstash.conf -w 1
```

In production, you’ll most likely want to set up a service for LogStash instead of starting it manually each time. The init.d service script we use (linked in the original article) should do the trick.

Woo Hoo, if you’ve made it this far, give yourself a big round of applause. Maybe grab a frosty adult beverage.

Now, let’s set up each nginx web server.

```shell
cd $HOME

# Install Java
sudo yum -y install java-1.7.0-openjdk

# Install LogStash
wget http://logstash.objects.dreamhost.com/release/logstash-1.1.13-flatjar.jar
sudo mkdir --parents /usr/local/bin/logstash
sudo mv logstash-1.1.13-flatjar.jar /usr/local/bin/logstash/logstash.jar

# Create LogStash configuration file
cd /etc
sudo touch logstash.conf
```

Use the following LogStash configuration for each nginx server:

```
# Contents of /etc/logstash.conf
input {
  file {
    type => "nginx_access"
    path => ["/var/log/nginx/**"]
    exclude => ["*.gz", "error.*"]
    discover_interval => 10
  }
}
filter {
  grok {
    type => "nginx_access"
    pattern => "%{COMBINEDAPACHELOG}"
  }
}
output {
  redis {
    host => "hostname-of-centralized-log-server"
    data_type => "list"
    key => "logstash"
  }
}
```

Start LogStash on each nginx server:

```shell
/usr/bin/java -jar /usr/local/bin/logstash/logstash.jar agent --config /etc/logstash.conf -w 2
```
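The shipper configuration makes two decisions that are easier to reason about in isolation: which files under /var/log/nginx get read, and how each line is split into fields. The sketch below imitates both in plain shell so you can try them without LogStash. It assumes GNU sed. `is_shipped` follows the file input documentation, which says `exclude` globs are matched against the file name rather than the full path. `parse_combined` and its regex are a hypothetical, simplified approximation of grok's `%{COMBINEDAPACHELOG}`, not the pattern LogStash ships; the approach works for nginx because nginx’s predefined `combined` log format uses the same layout as Apache’s combined format.

```shell
#!/bin/sh
# Sketch of the shipper config's two decisions, outside LogStash.
# Assumes GNU sed (for -E and \n in the replacement).

# 1. exclude => ["*.gz", "error.*"]: globs applied to the base name only.
is_shipped() {
  name=$(basename "$1")
  case $name in
    *.gz | error.*) return 1 ;;  # compressed rotations and error logs are skipped
    *) return 0 ;;
  esac
}

# 2. Simplified stand-in for %{COMBINEDAPACHELOG}. The ident and auth
#    columns are matched but not captured.
COMBINED_RE='^([^ ]+) [^ ]+ [^ ]+ \[([^]]+)\] "([A-Z]+) ([^ ]+) HTTP/([0-9.]+)" ([0-9]{3}) ([0-9]+|-) "([^"]*)" "([^"]*)"$'

parse_combined() {
  # Prints one key=value line per field on a match, nothing otherwise.
  printf '%s\n' "$1" | sed -nE "s#${COMBINED_RE}#clientip=\1\ntimestamp=\2\nverb=\3\nrequest=\4\nhttpversion=\5\nresponse=\6\nbytes=\7\nreferrer=\8\nagent=\9#p"
}

for f in /var/log/nginx/access.log /var/log/nginx/access.log-20130701.gz /var/log/nginx/error.log; do
  if is_shipped "$f"; then echo "ship: $f"; else echo "skip: $f"; fi
done

parse_combined '203.0.113.5 - - [10/Jul/2013:12:00:00 +0000] "GET /index.html HTTP/1.1" 200 612 "-" "curl/7.19.7"'
```

Running it prints `ship:` for access.log and `skip:` for the other two files, followed by one `key=value` line per field of the sample request. Note that an uncompressed rotated file such as access.log.1 matches neither exclude pattern. grok’s own pattern handles more request variants than this regex, so treat a non-match here as a hint rather than proof that grok will fail.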

At this point, you’ve got your nginx web servers shipping their access logs to a centralized log server via Redis. The centralized log server is churning away, processing the events from Redis and storing them in ElasticSearch.

All that is left is to set up a web interface to interact with the data in ElasticSearch. The clear choice for this is Kibana. Even though LogStash comes with its own web interface, use Kibana instead: the folks who maintain LogStash recommend Kibana themselves and plan to deprecate their own web interface in the near future. Moral of the story: use Kibana.

On your centralized log server, get and install Kibana.

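```shell
cd $HOME

# Install Ruby (on CentOS 6 the gem command ships in the separate rubygems package)
sudo yum -y install ruby rubygems

# Install Kibana
wget https://github.com/rashidkpc/Kibana/archive/v0.2.0.zip
unzip v0.2.0.zip
rm -f v0.2.0.zip
sudo mv Kibana-0.2.0 /srv/kibana
```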

```shell
# Edit Kibana configuration file
cd /srv/kibana
sudo nano KibanaConfig.rb
# Set Elasticsearch = "localhost:9200"
sudo gem install bundler
sudo bundle install
```
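If you would rather not open an editor (for example, when scripting this install), the same setting can be changed with `sed`. This is a sketch that assumes the value sits on its own line in the form `Elasticsearch = "..."`, as the comment in the step above suggests; `set_kibana_es` is a hypothetical helper name, and GNU sed is assumed for `-i`.

```shell
#!/bin/sh
# Hypothetical helper: point Kibana at an ElasticSearch address without an editor.
# Assumes the setting appears on its own line as:  Elasticsearch = "host:port"
set_kibana_es() {
  config_file=$1
  es_address=$2
  # Keep the indentation and the "Elasticsearch = " prefix (group 1),
  # and replace everything after it with the quoted new address.
  sed -i "s|^\([[:space:]]*Elasticsearch[[:space:]]*=[[:space:]]*\).*|\1\"${es_address}\"|" "$config_file"
}
```

On the centralized server this would be `set_kibana_es /srv/kibana/KibanaConfig.rb localhost:9200`, run with root privileges because the Kibana files were moved into /srv with sudo. Commented-out lines starting with `#` are left alone, since the pattern requires `Elasticsearch` to be the first non-blank text on the line.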

```shell
# Start Kibana
ruby kibana.rb
```

Simply open up your browser and navigate to http://hostname-of-centralized-log-server:5601 and you should see the Kibana interface load right up.

Lastly, just like for ElasticSearch, you’ll probably want Kibana to run as a service and autostart. Again, the init.d service script we use is linked in the original article.

Congratulations, you’re now shipping your nginx access logs like a boss to ElasticSearch and using the Kibana web interface to grep and filter them.

Interested in automating this entire install of ElasticSearch, Redis, LogStash and Kibana on your infrastructure? We can help! Commando.io is a web-based interface for managing servers and running remote executions over SSH. Request a beta invite today, and start managing servers easily online.