XML Notes (Performance Testing)

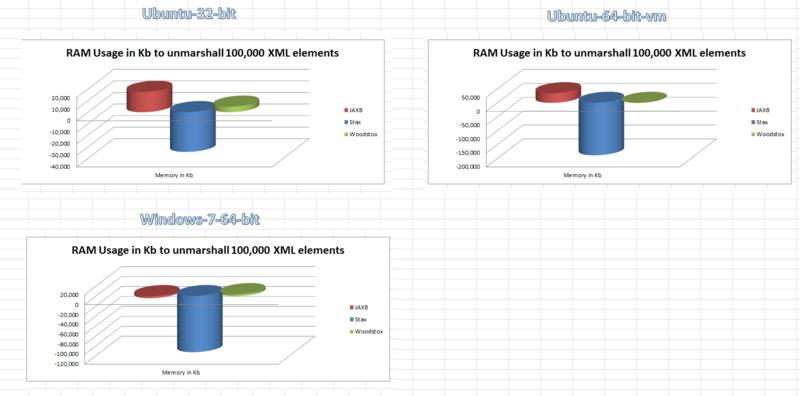

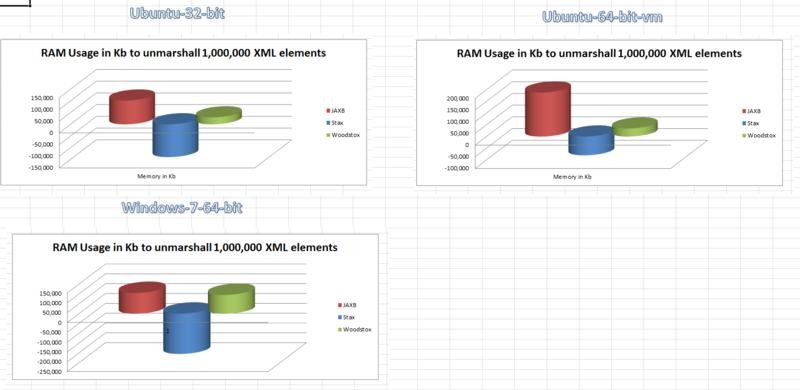

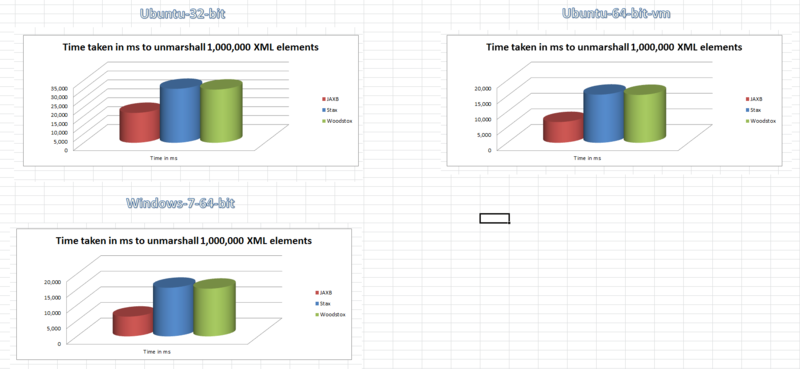

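This article is reposted from http://java.dzone.com/articles/xml-unmarshalling-benchmark. It compares the memory and time performance of JAXB, STAX 1.0 and Woodstox when parsing XML files with many nodes; unfortunately, it does not include a comparison chart of CPU usage.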

XML unmarshalling benchmark in Java: JAXB vs STax vs Woodstox

Towards the end of last week I started thinking about how to deal with large amounts of XML data in a resource-friendly way. The main problem that I wanted to solve was how to process large XML files in chunks while at the same time providing upstream/downstream systems with some data to process.

Of course I've been using JAXB for a few years now. Its main advantage is quick time-to-market: if one has an XML schema, there are tools to generate the corresponding Java domain model classes automatically (Eclipse Indigo, Maven JAXB plugins in various flavours, Ant tasks, to name a few). The JAXB API then offers a Marshaller and an Unmarshaller to write and read XML data, mapping it to and from the Java domain model.

When thinking of JAXB as a solution for my problem I suddenly realised that JAXB keeps the whole object graph built from the XML in memory, so the obvious question was: "How would our infrastructure cope with large XML files (e.g. in my case with a number of elements > 100,000) if we were to use JAXB?". I could simply have produced a large XML file, written a client for it and measured the memory consumption.

As one probably knows, there are mainly two approaches to processing XML data in Java: DOM and SAX. With DOM, the XML document is represented in memory as a tree; DOM is useful if one needs cherry-pick access to the tree nodes or if one needs to write brief XML documents. On the other side of the spectrum there is SAX, an event-driven technology, where the whole document is parsed one XML element at a time, and for each significant XML event, callbacks are "pushed" to a Java client which then deals with them (such as startDocument, startElement, endElement, etc.). Since SAX does not bring the whole document into memory but applies a cursor-like approach to XML processing, it does not consume huge amounts of memory. The drawback with SAX is that it processes the whole document from start to finish; this is not necessarily what one wants for large XML documents. In my scenario, for instance, I'd like to be able to pass XML elements to downstream systems as they become available, but at the same time maybe I'd like to pass only 100 elements at a time, implementing some sort of pagination solution. DOM seems too demanding from a memory-consumption point of view, whereas SAX seems too coarse-grained for my needs.
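To make the push model concrete, here is a minimal sketch using the SAX parser that ships with the JDK. The `<person>` element name is taken from the benchmark; the `persons` root element and the class and method names are assumptions made for illustration only.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.SAXParser;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.helpers.DefaultHandler;

class SaxPushSketch {

    /** Counts <person> start tags. The parser calls this handler; the client never asks for events. */
    static class PersonCounter extends DefaultHandler {
        int count;

        @Override
        public void startElement(String uri, String localName, String qName, Attributes attrs) {
            // The default factory is not namespace-aware, so the tag name arrives in qName.
            if ("person".equals(qName)) {
                count++;
            }
        }
    }

    /** Parses the whole document and returns the number of <person> elements seen. */
    static int countPersons(String xml) {
        try {
            SAXParser parser = SAXParserFactory.newInstance().newSAXParser();
            PersonCounter handler = new PersonCounter();
            // parse() returns only after the entire document has been pushed through the handler.
            parser.parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)), handler);
            return handler.count;
        } catch (Exception e) {
            // Checked exceptions are wrapped to keep the sketch short.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        String xml = "<persons><person><name>Ann</name></person>"
                + "<person><name>Bob</name></person></persons>";
        System.out.println(countPersons(xml));
    }
}
```

Note that the client has no natural way to pause after the first 100 elements: control stays with the parser until `parse()` returns.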

I remembered reading something about STax, a Java technology which offers a middle ground: the capability to pull XML elements (as opposed to having them pushed, as with SAX) while being RAM-friendly. I then looked into the technology and decided that STax was probably the compromise I was looking for; however, I wanted to keep the easy programming model offered by JAXB, so I really needed a combination of the two. While investigating STax, I came across Woodstox; this open source project promises to be a faster XML parser than many others, so I decided to include it in my benchmark as well. I now had all the elements to create a benchmark that would give me memory consumption and processing speed metrics when processing large XML documents.
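The pull model can be sketched with the JDK's `XMLStreamReader`. In this minimal example the caller decides when to ask for the next event and can stop as soon as it has enough data. The `<name>` child element and the class and method names are assumptions for illustration; they do not come from the benchmark code.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

class StaxPullSketch {

    /** Pulls at most 'limit' <name> values, then stops reading the document. */
    static List<String> firstNames(String xml, int limit) {
        List<String> names = new ArrayList<>();
        try {
            XMLStreamReader reader = XMLInputFactory.newInstance()
                    .createXMLStreamReader(new StringReader(xml));
            try {
                // The client asks for each event; the loop ends early once 'limit' is reached.
                while (names.size() < limit && reader.hasNext()) {
                    if (reader.next() == XMLStreamConstants.START_ELEMENT
                            && "name".equals(reader.getLocalName())) {
                        // getElementText() reads the text and leaves the reader on </name>.
                        names.add(reader.getElementText());
                    }
                }
            } finally {
                reader.close();
            }
        } catch (XMLStreamException e) {
            // Wrapped to keep the sketch short.
            throw new IllegalStateException(e);
        }
        return names;
    }

    public static void main(String[] args) {
        String xml = "<persons><person><name>Ann</name></person>"
                + "<person><name>Bob</name></person>"
                + "<person><name>Cy</name></person></persons>";
        System.out.println(firstNames(xml, 2));
    }
}
```

Because the rest of the document is never read once the limit is reached, this style lends itself to the kind of pagination described above.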

The benchmark plan

In order to create a benchmark I needed to do the following:

- Create an XML schema which defined my domain model. This would be the input for JAXB to create the Java domain model
- Create three large XML files representing the model, with 10,000 / 100,000 / 1,000,000 elements respectively
- Have a pure JAXB client which would unmarshal the large XML files completely in memory
- Have a STax/JAXB client which would combine the low memory consumption of SAX technologies with the ease of programming model offered by JAXB
- Have a Woodstox/JAXB client with the same characteristics as the STax/JAXB client (in short, I just wanted to change the underlying parser and see if I could obtain any performance boost)
- Record both memory consumption and speed of processing (e.g. how quickly would each solution make XML chunks available in memory as JAXB domain model classes)
- Make the results available graphically, since, as we know, a picture is worth a thousand words.

The Domain Model XML Schema

Just a few things to notice about this pom.xml.

I use Java 6, since starting from version 6, Java contains all the XML libraries for JAXB, DOM, SAX and STax. To auto-generate the domain model classes from the XSD schema, I used the excellent maven-jaxb2-plugin, which can, among other things, generate POJOs with toString, equals and hashCode support. I have also declared the jar plugin, to create an executable jar for the benchmark, and the assembly plugin, to distribute an executable version of the benchmark. The code for the benchmark is attached to this post, so if you want to build it and run it yourself, just unzip the project file, open a command line and run:

$ mvn clean install assembly:assembly

This command will place *-bin.* files into the folder target/site/downloads. Unzip the one you prefer and run the benchmark with the command below. Passing -Dcreate.xml=true generates the XML files; omit it if you already have them, e.g. after the first run:

$ java -jar -Dcreate.xml=true large-xml-parser-1.0.0-SNAPSHOT.jar

Creating the test data

To create the test data, I used PODAM, a Java tool to auto-fill POJOs and JavaBeans with data. The code is as simple as:
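As a rough stand-in for the data-generation step, the sketch below writes a given number of `<person>` elements with the JDK's `XMLStreamWriter`. It does not use PODAM and is not the benchmark's own generator; the `persons` root element, the `name` child element, the generated values and all class and method names are assumptions for illustration.

```java
import java.io.StringWriter;
import java.io.Writer;
import javax.xml.stream.XMLOutputFactory;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamWriter;

class TestDataSketch {

    /** Streams 'count' <person> elements to 'out' without building the document in memory. */
    static void writePersons(Writer out, int count) {
        try {
            XMLStreamWriter w = XMLOutputFactory.newInstance().createXMLStreamWriter(out);
            w.writeStartDocument();
            w.writeStartElement("persons");
            for (int i = 0; i < count; i++) {
                w.writeStartElement("person");
                w.writeStartElement("name");
                w.writeCharacters("person-" + i); // placeholder value, not PODAM-generated data
                w.writeEndElement(); // </name>
                w.writeEndElement(); // </person>
            }
            w.writeEndElement(); // </persons>
            w.writeEndDocument();
            w.close();
        } catch (XMLStreamException e) {
            // Wrapped to keep the sketch short.
            throw new IllegalStateException(e);
        }
    }

    /** Convenience wrapper for small documents; large files would use a FileWriter instead. */
    static String personsXml(int count) {
        StringWriter sw = new StringWriter();
        writePersons(sw, count);
        return sw.toString();
    }

    public static void main(String[] args) {
        System.out.println(personsXml(2));
    }
}
```

For the 10,000 / 100,000 / 1,000,000 element files, the same method could be pointed at a buffered file writer so that memory use stays flat regardless of the element count.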

The code uses a one-liner to unmarshal each XML file:

JAXB + STax

With STax, I just had to use an XMLStreamReader, iterate through all <person> elements, and pass each in turn to JAXB to unmarshal it into a PersonType domain model object. The code follows:
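A minimal sketch of such a loop, using only JDK classes, might look like this. It is not the article's original listing: the JAXB step is replaced by a hypothetical `ElementMapper` callback so that the example runs without a JAXB dependency, and the sample `textOf` mapper, the `persons` root element and all class and method names are assumptions.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

class StaxPerElementSketch {

    /** Hypothetical stand-in for the JAXB step: turns one <person> subtree into an object. */
    interface ElementMapper<T> {
        T map(XMLStreamReader reader) throws XMLStreamException;
    }

    /** Walks the document and hands the reader to 'mapper' at every <person> start tag. */
    static <T> List<T> readPersons(String xml, ElementMapper<T> mapper) {
        List<T> result = new ArrayList<>();
        try {
            XMLStreamReader reader = XMLInputFactory.newInstance()
                    .createXMLStreamReader(new StringReader(xml));
            try {
                while (reader.hasNext()) {
                    if (reader.next() == XMLStreamConstants.START_ELEMENT
                            && "person".equals(reader.getLocalName())) {
                        // Only this one element is materialised at a time.
                        result.add(mapper.map(reader));
                    }
                }
            } finally {
                reader.close();
            }
        } catch (XMLStreamException e) {
            // Wrapped to keep the sketch short.
            throw new IllegalStateException(e);
        }
        return result;
    }

    /** Sample mapper: concatenates the text inside the current element and stops on its end tag. */
    static String textOf(XMLStreamReader reader) throws XMLStreamException {
        StringBuilder text = new StringBuilder();
        int depth = 1; // the reader is on the element's start tag
        while (depth > 0) {
            int event = reader.next();
            if (event == XMLStreamConstants.START_ELEMENT) {
                depth++;
            } else if (event == XMLStreamConstants.END_ELEMENT) {
                depth--;
            } else if (event == XMLStreamConstants.CHARACTERS) {
                text.append(reader.getText());
            }
        }
        return text.toString().trim();
    }

    public static void main(String[] args) {
        String xml = "<persons><person><name>Ann</name></person>"
                + "<person><name>Bob</name></person></persons>";
        System.out.println(readPersons(xml, StaxPerElementSketch::textOf));
    }
}
```

With JAXB, a call such as `unmarshaller.unmarshal(reader, PersonType.class)` would take the mapper's place. Its Javadoc states that after a successful call the reader points at the token right after the element's end event, so the loop would then need to inspect the current event before calling `next()` again, otherwise an adjacent `<person>` start tag could be skipped.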

Summary for 100,000 XML elements

The results in all three environments, despite some differences, tell the same story:

- If you are looking for performance (e.g. XML unmarshalling speed), choose JAXB
- If you are looking for low memory usage (and are ready to sacrifice some speed), then use STax.

My personal opinion is also that I wouldn't go for Woodstox, but I'd choose either JAXB (if I needed processing power and could afford the RAM) or STax (if I didn't need top speed and was low on infrastructure resources). Both these technologies are Java standards and part of the JDK starting from Java 6.
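To check this trade-off on one's own hardware, elapsed time and approximate heap growth can be sampled around a parsing run. The sketch below is one simple way to do that with `System.nanoTime()` and `Runtime`; it is not necessarily how the benchmark took its measurements, and the class and method names are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

class MeasureSketch {

    /** Elapsed wall-clock time and approximate heap growth for one run. */
    static final class Sample {
        final long elapsedMillis;
        final long heapDeltaBytes;

        Sample(long elapsedMillis, long heapDeltaBytes) {
            this.elapsedMillis = elapsedMillis;
            this.heapDeltaBytes = heapDeltaBytes;
        }
    }

    /** Heap currently in use, as reported by the JVM. */
    static long usedHeap() {
        Runtime rt = Runtime.getRuntime();
        return rt.totalMemory() - rt.freeMemory();
    }

    /** Runs 'task' once and records how long it took and how much the used heap changed. */
    static Sample measure(Runnable task) {
        System.gc(); // only a hint to the JVM, so the heap figure stays approximate
        long heapBefore = usedHeap();
        long start = System.nanoTime();
        task.run();
        long elapsedNanos = System.nanoTime() - start;
        long heapAfter = usedHeap();
        return new Sample(elapsedNanos / 1_000_000, heapAfter - heapBefore);
    }

    public static void main(String[] args) {
        List<String> sink = new ArrayList<>();
        // Placeholder workload; a real run would call the parser under test here.
        Sample s = measure(() -> {
            for (int i = 0; i < 100_000; i++) {
                sink.add("person-" + i);
            }
        });
        System.out.println(s.elapsedMillis + " ms, " + s.heapDeltaBytes + " bytes");
    }
}
```

A single run like this is noisy because of JIT warm-up and garbage collection, so repeating each measurement several times and comparing the trend is more reliable than reading one figure.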

Resources

Benchmarker source code:
- Zip version: Download Large-xml-parser-1.0.0-SNAPSHOT-project
- tar.gz version: Download Large-xml-parser-1.0.0-SNAPSHOT-project.tar
- tar.bz2 version: Download Large-xml-parser-1.0.0-SNAPSHOT-project.tar

Benchmarker executables:
- Zip version: Download Large-xml-parser-1.0.0-SNAPSHOT-bin
- tar.gz version: Download Large-xml-parser-1.0.0-SNAPSHOT-bin.tar
- tar.bz2 version: Download Large-xml-parser-1.0.0-SNAPSHOT-bin.tar

Data files:
- Ubuntu 64-bit VM running environment: Download Stax-vs-jaxb-ubuntu-64-vm
- Ubuntu 32-bit running environment: Download Stax-vs-jaxb-ubuntu-32-bit
- Windows 7 Ultimate running environment: Download Stax-vs-jaxb-windows7

From http://tedone.typepad.com/blog/2011/06/unmarshalling-benchmark-in-java-jaxb-vs-stax-vs-woodstox.html