Distributed installation of Cloudera CDH3u0 Hadoop and HBase on Ubuntu 11.04


    JAVA_HOME=/usr/local/jdk1.6.0_24
    JRE_HOME=$JAVA_HOME/jre
    CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH
    HADOOP_HOME=/home/hadoop/cdh3/hadoop-0.20.2-cdh3u0
    HBASE_HOME=/home/hadoop/cdh3/hbase-0.90.1-cdh3u0
    PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HBASE_HOME/bin:$PATH
    export JAVA_HOME JRE_HOME CLASSPATH HADOOP_HOME HBASE_HOME PATH

On 229-231, edit /etc/profile and add:

    JAVA_HOME=/usr/local/jdk1.6.0_24
    ZOOKEEPER_HOME=/home/hadoop/cdh3/zookeeper-3.3.3-cdh3u0
    PATH=$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$ZOOKEEPER_HOME/conf:$PATH
    export JAVA_HOME ZOOKEEPER_HOME PATH

Passwordless SSH login

Log in to every machine as the hadoop user and create a .ssh directory under /home/hadoop/.

Run

# ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

This generates two files in ~/.ssh/: id_dsa and id_dsa.pub.

# cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

Copy authorized_keys to 222-232

# scp /home/hadoop/.ssh/authorized_keys hadoop@192.168.0.222:/home/hadoop/.ssh/
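The command above targets only 192.168.0.222, so the same copy has to be repeated for the remaining hosts. A minimal sketch of a loop over 222-232, assuming the addresses are consecutive as in this setup. The `copy_keys` function and the `RUN` switch are hypothetical conveniences, not part of the original procedure; by default the commands are only printed, not executed.

```shell
#!/bin/sh
# RUN is a hypothetical switch: when unset, "echo" is used and each command
# is only printed. Export RUN="" to actually execute the copies.
RUN=${RUN-echo}

copy_keys() {
  # Hosts 192.168.0.222 through 192.168.0.232, as used in this guide.
  for last in $(seq 222 232); do
    $RUN scp /home/hadoop/.ssh/authorized_keys \
      "hadoop@192.168.0.$last:/home/hadoop/.ssh/"
  done
}

copy_keys
```

Printing first makes it easy to review the host list before anything is sent over the network.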

Edit the hosts file

# sudo vim /etc/hosts

Change /etc/hosts on every machine to:

    127.0.0.1 localhost
    192.168.0.221 ubuntu-1
    192.168.0.222 ubuntu-2
    192.168.0.223 ubuntu-3
    192.168.0.224 ubuntu-4
    192.168.0.225 ubuntu-5
    192.168.0.226 ubuntu-6
    192.168.0.227 ubuntu-7
    192.168.0.228 ubuntu-8
    192.168.0.229 ubuntu-9
    192.168.0.230 ubuntu-10
    192.168.0.231 ubuntu-11
    192.168.0.232 ubuntu-12
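The entries above follow a fixed pattern: ubuntu-N maps to 192.168.0.(220+N) for N from 1 to 12. As a sketch under that assumption, the content can be generated rather than typed by hand. The function name `gen_hosts` is hypothetical, and the script only prints to standard output; it does not touch /etc/hosts.

```shell
#!/bin/sh
# Print the /etc/hosts content used in this guide:
# ubuntu-N maps to 192.168.0.(220+N) for N = 1..12.
gen_hosts() {
  echo "127.0.0.1 localhost"
  n=1
  while [ "$n" -le 12 ]; do
    echo "192.168.0.$((220 + n)) ubuntu-$n"
    n=$((n + 1))
  done
}

gen_hosts
```

The output can be reviewed and then pasted into /etc/hosts on each machine.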

From 221, log in to each of 221-232 by hostname. The first login asks for confirmation; after that you can log in directly.

# ssh ubuntu-1

# ssh ubuntu-2

# ssh ubuntu-3

# ssh ubuntu-4

# ssh ubuntu-5

# ssh ubuntu-6

# ssh ubuntu-7

# ssh ubuntu-8

# ssh ubuntu-9

# ssh ubuntu-10

# ssh ubuntu-11

# ssh ubuntu-12

3. Install Hadoop

Create /data on 221 and 232

# sudo mkdir /data

# sudo chown hadoop /data

On 222-228, create /disk1, /disk2 and /disk3

# sudo mkdir /disk1

# sudo mkdir /disk2

# sudo mkdir /disk3

# sudo chown hadoop /disk1

# sudo chown hadoop /disk2

# sudo chown hadoop /disk3

Edit /home/hadoop/cdh3/hadoop-0.20.2-cdh3u0/conf/hadoop-env.sh and add:

    export JAVA_HOME=/usr/local/jdk1.6.0_24

Edit /home/hadoop/cdh3/hadoop-0.20.2-cdh3u0/conf/core-site.xml and add:

    <property>
      <name>hadoop.tmp.dir</name>
      <value>/data</value>
      <description>A base for other temporary directories.</description>
    </property>
    <property>
      <name>fs.default.name</name>
      <value>hdfs://ubuntu-1:9000/</value>
      <description>The name of the default file system. A URI whose
      scheme and authority determine the FileSystem implementation. The
      uri's scheme determines the config property (fs.SCHEME.impl) naming
      the FileSystem implementation class. The uri's authority is used to
      determine the host, port, etc. for a filesystem.</description>
    </property>

Edit /home/hadoop/cdh3/hadoop-0.20.2-cdh3u0/conf/hdfs-site.xml and add:

    <property>
      <name>dfs.name.dir</name>
      <value>/home/hadoop/data</value>
    </property>
    <property>
      <name>dfs.data.dir</name>
      <value>/disk1,/disk2,/disk3</value>
    </property>
    <property>
      <name>dfs.permissions</name>
      <value>false</value>
    </property>
    <property>
      <name>dfs.replication</name>
      <value>3</value>
    </property>

Edit /home/hadoop/cdh3/hadoop-0.20.2-cdh3u0/conf/mapred-site.xml and add:

    <property>
      <name>mapred.job.tracker</name>
      <value>ubuntu-1:9001</value>
      <description>The host and port that the MapReduce job tracker runs
      at. If "local", then jobs are run in-process as a single map and
      reduce task.</description>
    </property>

Edit /home/hadoop/cdh3/hadoop-0.20.2-cdh3u0/conf/masters and add:

    ubuntu-12

Edit /home/hadoop/cdh3/hadoop-0.20.2-cdh3u0/conf/slaves and add:

    ubuntu-2
    ubuntu-3
    ubuntu-4
    ubuntu-5
    ubuntu-6
    ubuntu-7
    ubuntu-8

Copy Hadoop from 221 to 222-228 and 232

# scp -r /home/hadoop/cdh3/hadoop-0.20.2-cdh3u0/ hadoop@192.168.0.222:/home/hadoop/cdh3/
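The command above shows the copy for 192.168.0.222 only. A minimal sketch of the full distribution follows, taking the directory name and the last octet of each target address as arguments. `push_dir` and `RUN` are hypothetical names introduced for illustration; with `RUN` unset the commands are printed rather than run.

```shell
#!/bin/sh
# RUN is a hypothetical switch: when unset, "echo" is used and commands are
# only printed. Export RUN="" to actually execute them.
RUN=${RUN-echo}

# push_dir DIR LAST_OCTET...
# Copy /home/hadoop/cdh3/DIR/ to /home/hadoop/cdh3/ on each 192.168.0.x host.
push_dir() {
  dir=$1
  shift
  for last in "$@"; do
    $RUN scp -r "/home/hadoop/cdh3/$dir/" \
      "hadoop@192.168.0.$last:/home/hadoop/cdh3/"
  done
}

# Hadoop goes to the worker nodes (222-228) and the secondary master (232).
push_dir hadoop-0.20.2-cdh3u0 222 223 224 225 226 227 228 232
```

The same helper would fit the later HBase copy by passing `hbase-0.90.1-cdh3u0` as the directory name.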

Format the Hadoop file system

# hadoop namenode -format

Start Hadoop by running this on 221

# start-all.sh

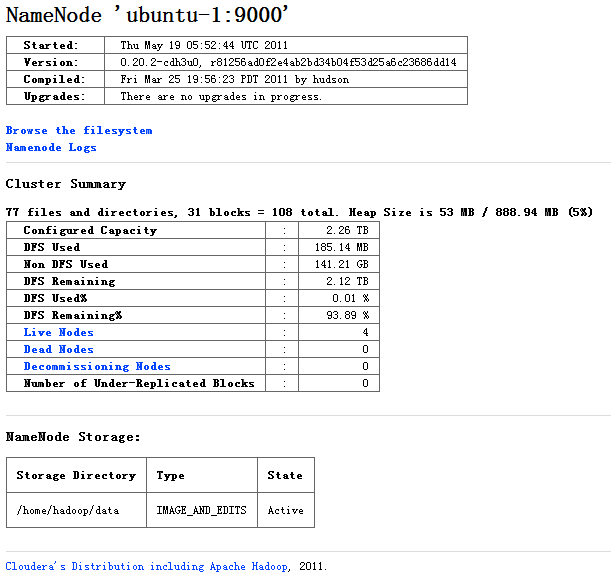

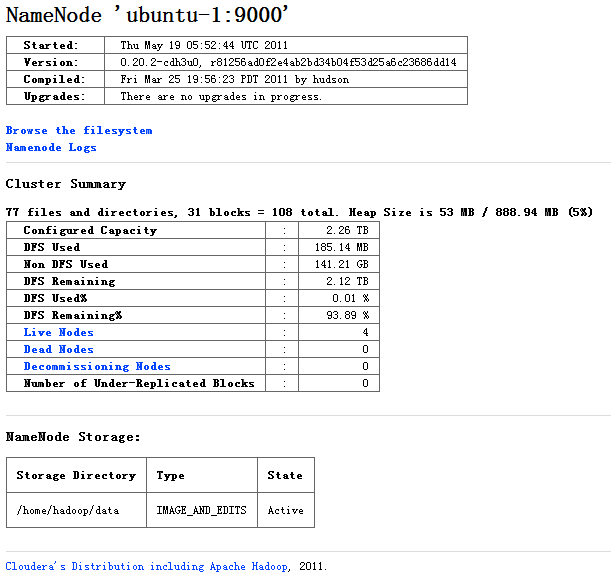

View the cluster status: http://192.168.0.221:50070/dfshealth.jsp

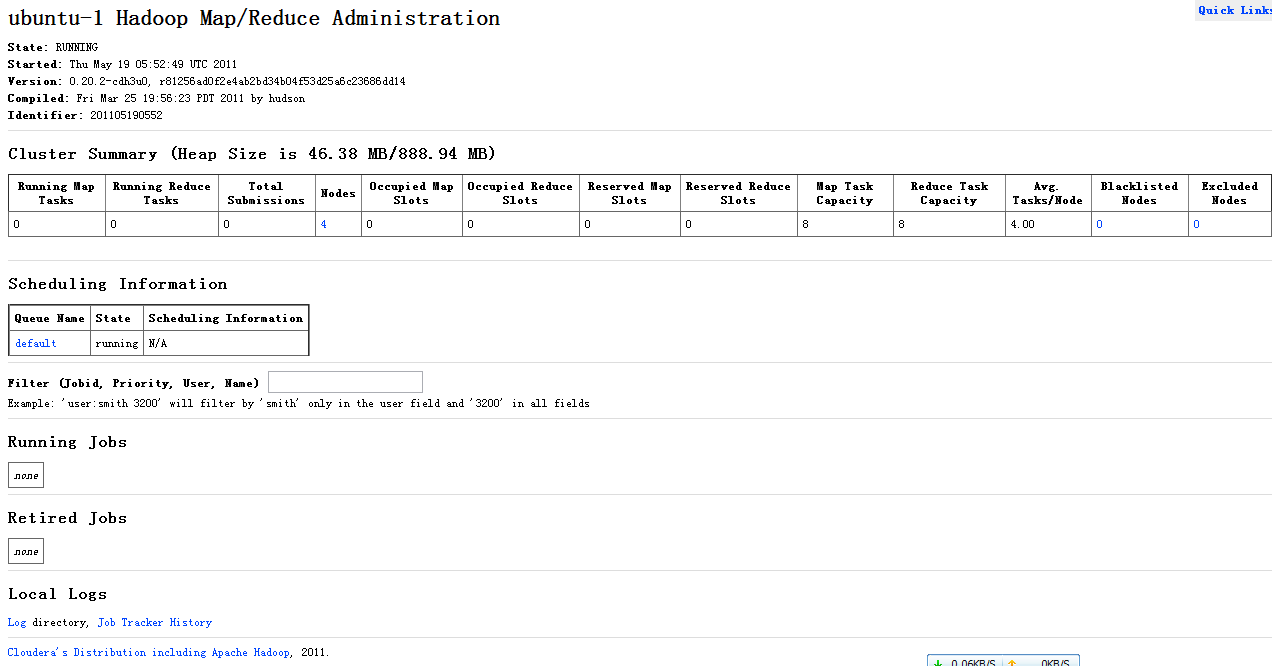

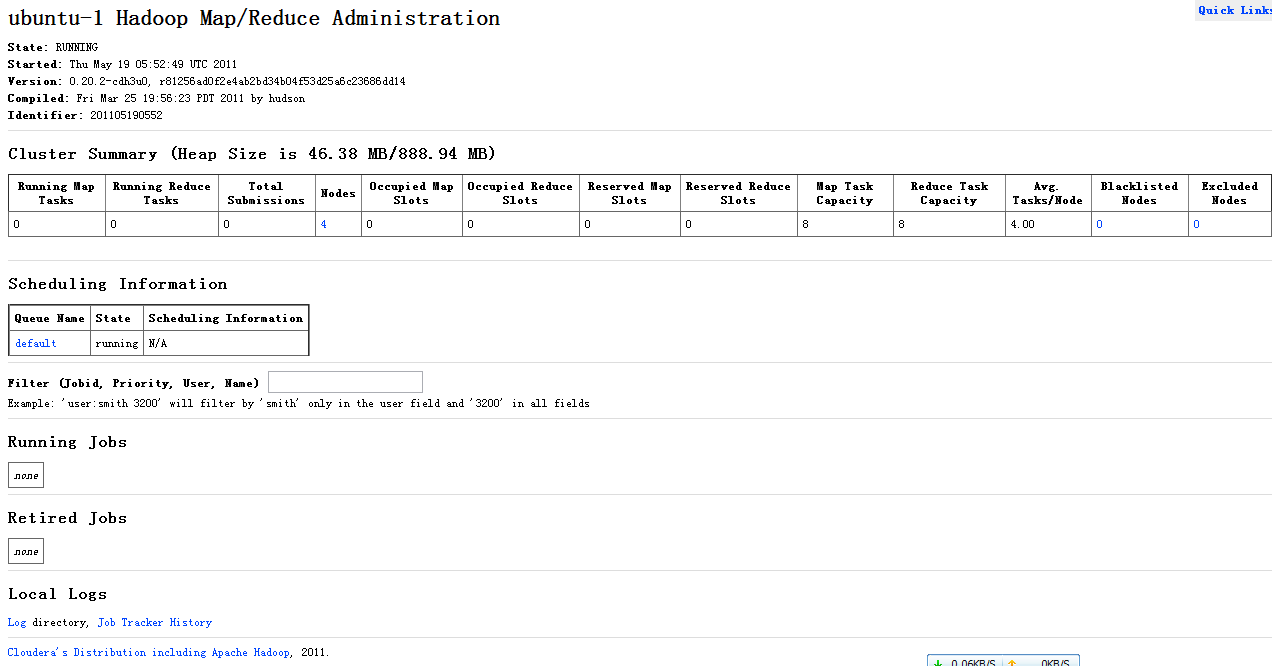

View the job status: http://192.168.0.221:50030/jobtracker.jsp

4. Install ZooKeeper

Create the /home/hadoop/zookeeperdata directory on 229-231

On 229, edit /home/hadoop/cdh3/zookeeper-3.3.3-cdh3u0/conf/zoo.cfg:

    # The number of milliseconds of each tick
    tickTime=2000
    # The number of ticks that the initial
    # synchronization phase can take
    initLimit=10
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=5
    # the directory where the snapshot is stored.
    dataDir=/home/hadoop/zookeeperdata
    # the port at which the clients will connect
    clientPort=2181
    server.1=ubuntu-9:2888:3888
    server.2=ubuntu-10:2888:3888
    server.3=ubuntu-11:2888:3888

Copy ZooKeeper from 229 to 230 and 231

# scp -r /home/hadoop/cdh3/zookeeper-3.3.3-cdh3u0/ hadoop@192.168.0.230:/home/hadoop/cdh3/

On 229, 230 and 231, create a myid file in /home/hadoop/zookeeperdata containing 1, 2 and 3 respectively
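Each myid value has to equal the N of that host's `server.N` line in zoo.cfg, which is why 229 (ubuntu-9) gets 1, 230 gets 2 and 231 gets 3. As a sketch, the id can be read from zoo.cfg instead of being typed by hand. The helper name `myid_for` is hypothetical, and the demo below runs against a temporary file rather than the real configuration.

```shell
#!/bin/sh
# myid_for HOST CFG
# Print N from the line "server.N=HOST:..." in the file CFG.
myid_for() {
  sed -n "s/^server\.\([0-9][0-9]*\)=$1:.*/\1/p" "$2"
}

# Demo against a temporary copy of the three server lines from this guide.
cfg=$(mktemp)
printf '%s\n' \
  'server.1=ubuntu-9:2888:3888' \
  'server.2=ubuntu-10:2888:3888' \
  'server.3=ubuntu-11:2888:3888' > "$cfg"
myid_for ubuntu-10 "$cfg"   # prints 2
rm -f "$cfg"

# Possible use on each ZooKeeper host (paths as in this guide):
#   myid_for "$(hostname)" /home/hadoop/cdh3/zookeeper-3.3.3-cdh3u0/conf/zoo.cfg \
#     > /home/hadoop/zookeeperdata/myid
```

Deriving the value from zoo.cfg keeps the two files from drifting apart if the server list changes.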

Start ZooKeeper by running this on each of 229-231

# zkServer.sh start

Once it is running, check the status with

# zkServer.sh status

5. Install HBase

On 221, edit /home/hadoop/cdh3/hbase-0.90.1-cdh3u0/conf/hbase-env.sh and add:

    export JAVA_HOME=/usr/local/jdk1.6.0_24
    export HBASE_MANAGES_ZK=false

On 221, edit /home/hadoop/cdh3/hbase-0.90.1-cdh3u0/conf/hbase-site.xml and add:

    <property>
      <name>hbase.rootdir</name>
      <value>hdfs://ubuntu-1:9000/hbase</value>
    </property>
    <property>
      <name>hbase.cluster.distributed</name>
      <value>true</value>
    </property>
    <property>
      <name>hbase.master.port</name>
      <value>60000</value>
    </property>
    <property>
      <name>hbase.zookeeper.quorum</name>
      <value>ubuntu-9,ubuntu-10,ubuntu-11</value>
    </property>

On 221, edit /home/hadoop/cdh3/hbase-0.90.1-cdh3u0/conf/regionservers and add:

    ubuntu-2
    ubuntu-3
    ubuntu-4
    ubuntu-5
    ubuntu-6
    ubuntu-7
    ubuntu-8

Copy HBase from 221 to 222-228 and 232

# scp -r /home/hadoop/cdh3/hbase-0.90.1-cdh3u0/ hadoop@192.168.0.222:/home/hadoop/cdh3/

Start HBase

Run on 221

# start-hbase.sh

Start the second HMaster

Run on 232

# hbase-daemon.sh start master

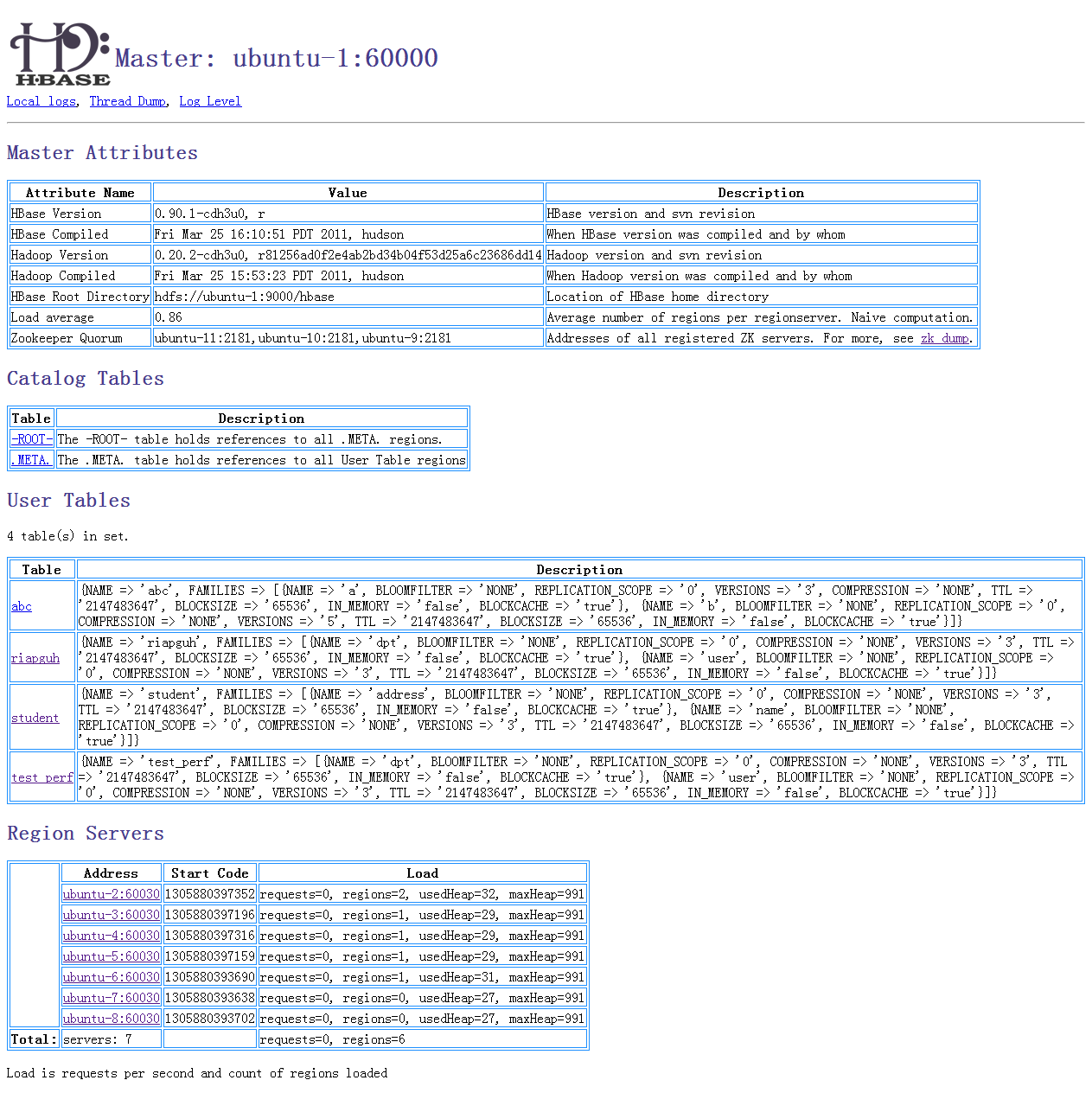

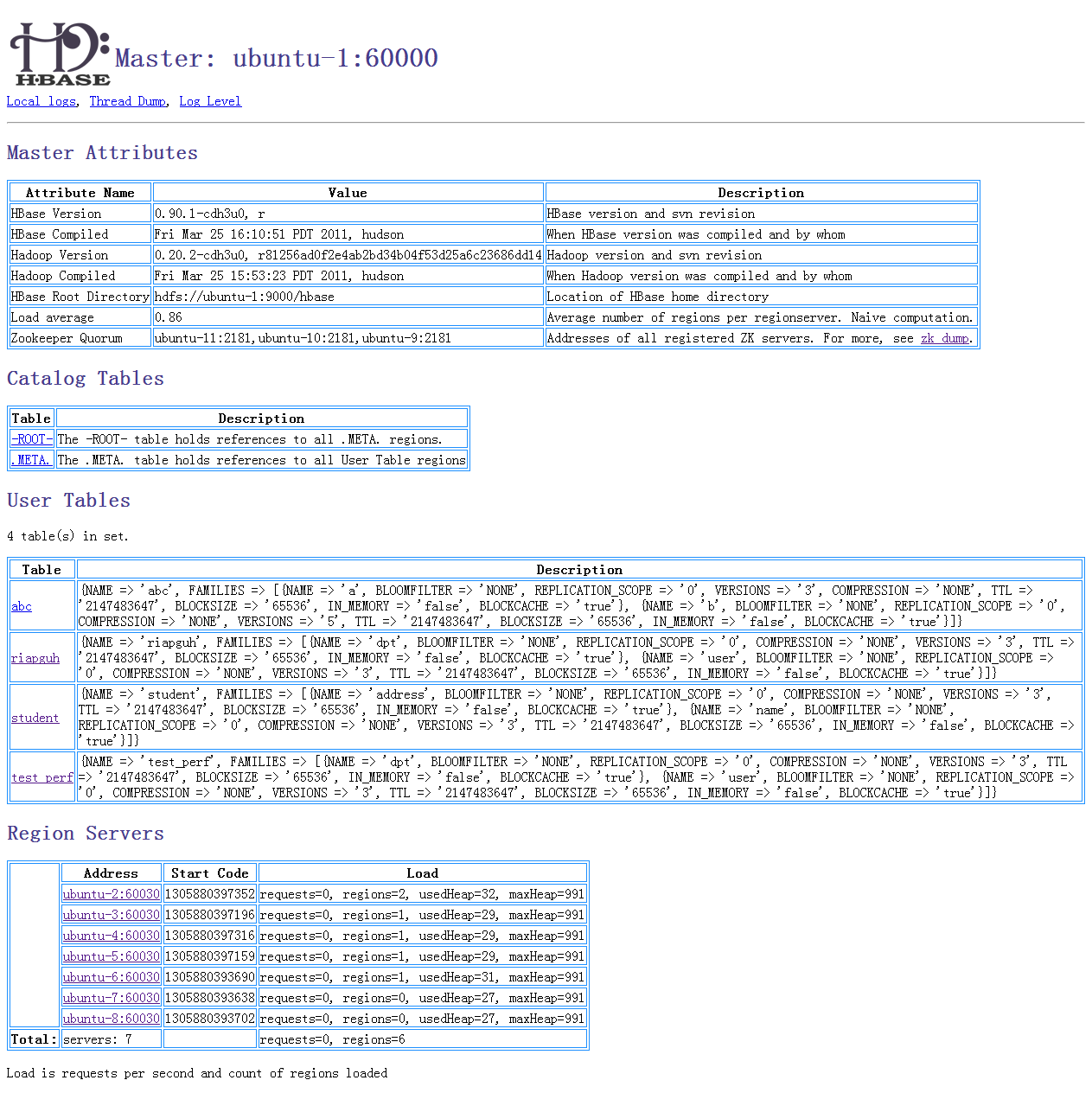

View the Master: http://192.168.0.221:60010/master.jsp

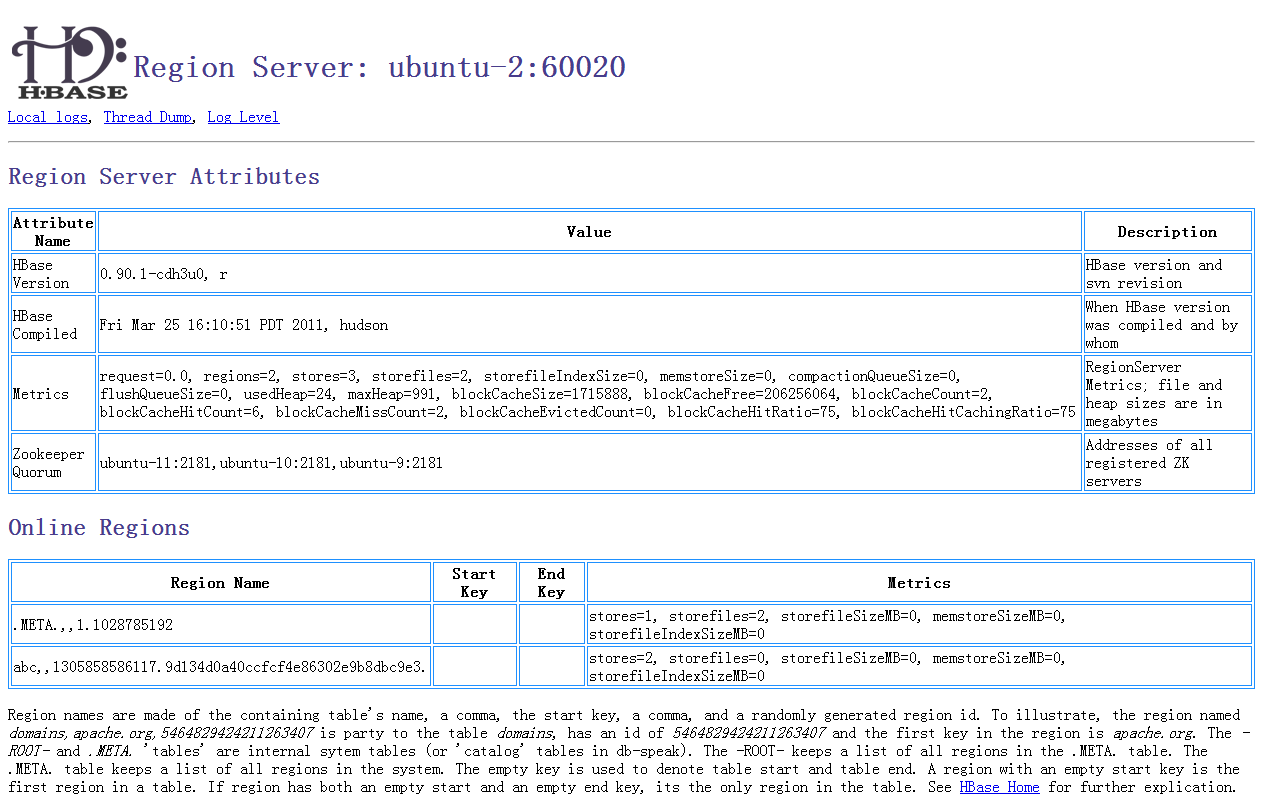

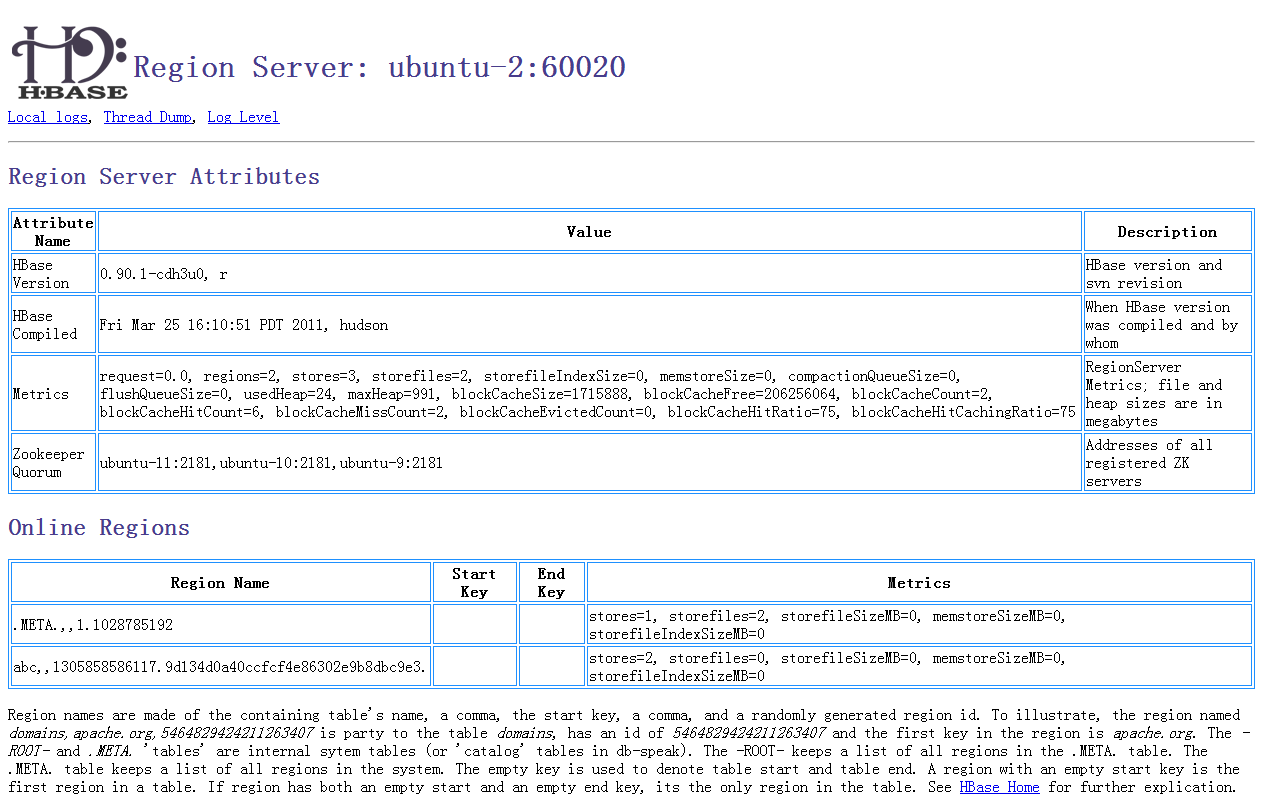

View a Region Server: http://192.168.0.222:60030/regionserver.jsp

View ZooKeeper: http://192.168.0.221:60010/zk.jsp

6. Notes

Use jps to check which processes are running on each machine

221

    JobTracker
    NameNode
    HMaster

222-228

    HRegionServer
    DataNode
    TaskTracker

229-231

    QuorumPeerMain

232

    SecondaryNameNode
    HMaster
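The per-machine lists above can be turned into a quick check. The sketch below assumes jps prints one `<pid> <name>` pair per line and reports which expected daemons are absent. The function name `missing_daemons` is hypothetical, and the sample input uses made-up PIDs standing in for real jps output.

```shell
#!/bin/sh
# missing_daemons "NAME ..."
# Read jps-style lines ("<pid> <name>") on stdin and print every expected
# NAME that does not appear in the second column.
missing_daemons() {
  names=$(awk '{print $2}')
  for want in $1; do
    printf '%s\n' "$names" | grep -qx "$want" || echo "$want"
  done
}

# Illustrative input only (made-up PIDs), standing in for `jps` on 221.
printf '%s\n' '101 NameNode' '102 JobTracker' '103 Jps' |
  missing_daemons "JobTracker NameNode HMaster"   # prints HMaster
```

On a real node the input would come from `jps` itself, for example `jps | missing_daemons "HRegionServer DataNode TaskTracker"` on 222-228. The exact-match comparison keeps NameNode from being confused with SecondaryNameNode.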

Startup order

1. hadoop

2. zookeeper

3. hbase

4. second HMaster

Shutdown order

1. second HMaster (terminate it with kill -9)

2. hbase

3. zookeeper

4. hadoop
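The startup order can also be written down as a checklist of "host: command" lines, using the commands and hosts from the earlier sections. This is only a sketch: `start_plan` is a hypothetical name, and the script prints the steps without executing anything.

```shell
#!/bin/sh
# Print the startup steps in order as "host: command" lines.
# Nothing is executed; this only generates a checklist.
start_plan() {
  echo "ubuntu-1: start-all.sh"                    # 1. hadoop
  for n in 9 10 11; do
    echo "ubuntu-$n: zkServer.sh start"            # 2. zookeeper
  done
  echo "ubuntu-1: start-hbase.sh"                  # 3. hbase
  echo "ubuntu-12: hbase-daemon.sh start master"   # 4. second HMaster
}

start_plan
```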